If there's one thing I find helpful about Jonathan Chait's work, it's that every now and then he gives us a "State Of The Reformy" piece that serves as a useful encapsulation of the current arguments among the neoliberal set for education "reform."

Chait's piece this time is especially notable because it's an explicit attempt to distance the Obama administration's education policies from those being pushed by the conservatives who have been emboldened by Donald Trump's win and, subsequently, Secretary of Education Betsy DeVos's rise to power.

Desperately, Chait wants to convince us that the agenda pushed by Arne Duncan and John King, Barack Obama's SecEds, represented some sort of middle ground between the hard-right's dream of privatizing education, and the left's indifference to the "failure" of American schools (allegedly a direct result of the vast influence and vast perfidy of teachers unions).

Chait's political argument is so silly it's almost not worth addressing: thankfully, Peter Greene, once again, does most of the work so I don't have to. The fact is that Chait makes sweeping generalizations about the left (and, for that matter, the right) that are absurd [emphases mine]:

"Left-wing policy supports neighborhood-based public schools, opposes any methods to measure or differentiate the performance of teachers or schools, and argues instead for alternatives to school reform like increased anti-poverty spending or urging middle-class parents to enroll their children in high-poverty schools."

And:

"Unions that oppose subjecting their members to any form of measurement joined forces with anti-government activists on the right to protest Common Core and testing."

Chait, of course, gives no examples of unions not wanting to hold teachers accountable for their practice -- because the notion is nonsense. The unions have never -- never -- held the position that bad teachers should be allowed to continue to teach without any remediation or consequence. What they have insisted, quite correctly, is that there be due process in place as a check against abuses of power, so that the interests of students and taxpayers, as well as teachers, can be protected.

Now, as much fun as it might be to knock down all of Chait's straw men, I'd like to instead focus on something else from his piece. Because Chait, like all education policy dilettantes, likes to dress up his arguments with references to education research -- specifically, research conducted by economists. Throughout his piece Chait includes links to a variety of econometric-based research, all purporting to uphold his claims for the efficacy of reformy policies.

I have no problems with economists. Literally, some of my favorite people in the world are economists. And I enjoy a good regression as much as the next guy. Useful work has been done by economists in the education field. I can honestly say my thinking about things like charter schools and teacher evaluation has been shaped by my study of econometric research into those topics.

However...

It has been my observation over the years that economists working in education have not been as forthcoming as they should about the limitations of their work. And this has led pundits and policymakers, like Jonathan Chait -- and, for that matter, Arne Duncan and John King -- to draw conclusions about education "reform" that are largely unsupportable.

Chait's piece here is an excellent example of this problem. So allow me to take a pointed stick and poke it into the econometric beehive; here are some things everyone should understand about recent research on things like charter schools and teacher evaluation that too many economists never seem to get around to mentioning.

* * *

- Charter school lottery studies are not "perfect natural experiments." The economists who conduct these studies are often quite eager to tout them as "exactly the research we need" to make policy decisions about the effects of charter school proliferation. I am here to tell you in no uncertain terms: they are not.

The theory behind charter lottery studies is that the randomization of the lottery controls for all unobserved (better understood as unmeasured) differences between students that might account for differences in the effects being studied. In the case of charter schools, we might assume (quite correctly) that different parents approach enrolling students into charters in different ways.

Parents who care more about their child's outcomes on tests, for example, may be more likely to enroll their child in a charter school if their local public school has low test scores. These parents may be more diligent about making sure their child completes homework or attends school, which could lead to higher test scores.

The economists who conduct these studies are assuming, because assignment to charter schools is random in lotteries, that the differences in these unobserved characteristics of students and their families will be swept away by their experiment. There are some other assumptions built into this framework, but it's generally a reasonable theory...

Except it only applies to students who enter the lottery. If students who enter charter lotteries under one set of conditions differ from students who enter under another -- and there is plenty of reason to believe that they do -- we can't generalize the findings of a charter lottery study to a larger population. In other words: even if we find an effect for charter schools, we can't know that effect will be the same if we expand the system.
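To see why a clean lottery can still mislead about expansion, here is a minimal simulation sketch. Everything in it is an assumption made for illustration, not a finding from any study: an unmeasured "engagement" trait, a charter benefit that grows with that trait, and families who are more likely to apply the more engaged they are. The function name `simulate_lottery` and all the numbers are hypothetical.

```python
import random
from statistics import mean


def simulate_lottery(n_students: int = 200_000, seed: int = 0):
    """Return (lottery estimate among applicants, true average effect for everyone)."""
    rng = random.Random(seed)
    true_effects = []      # every student's hypothetical gain from attending a charter
    winner_scores = []     # applicants who won the lottery and attended
    loser_scores = []      # applicants who lost the lottery
    for _ in range(n_students):
        engagement = rng.random()        # unmeasured family trait, uniform on [0, 1]
        effect = 0.3 * engagement        # ASSUMPTION: engaged families benefit more
        true_effects.append(effect)
        if rng.random() >= engagement:   # ASSUMPTION: engaged families apply more often
            continue                     # this student never entered the lottery
        # Engagement also raises scores directly; the lottery balances this out.
        baseline = 0.5 * engagement + rng.gauss(0.0, 0.5)
        if rng.random() < 0.5:           # the lottery itself: a fair coin flip
            winner_scores.append(baseline + effect)
        else:
            loser_scores.append(baseline)
    lottery_estimate = mean(winner_scores) - mean(loser_scores)
    return lottery_estimate, mean(true_effects)


if __name__ == "__main__":
    estimate, population_effect = simulate_lottery()
    print(f"lottery estimate (applicants only): {estimate:.3f} SD")
    print(f"average effect across all students: {population_effect:.3f} SD")
```

Under these made-up assumptions the lottery comparison is a fair estimate for applicants (about 0.20 SD in expectation), yet the average effect across all students is smaller (0.15 SD in expectation). The randomization did its job; the applicant pool simply isn't the population.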

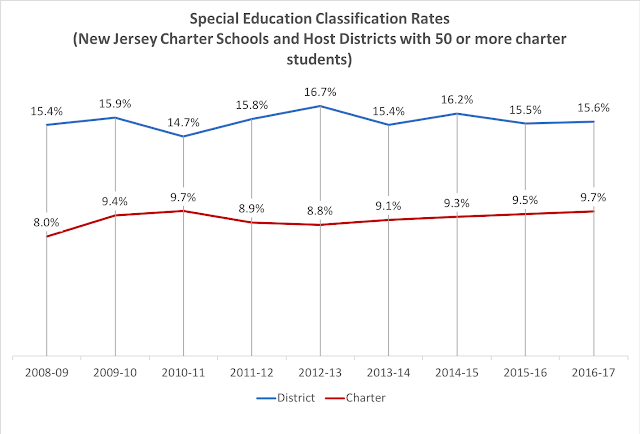

Further, we can only generalize the results of lottery studies to charter schools that are popular enough to be oversubscribed. In other words: if there's no lottery because student enrollment is low, we can't conduct the experiment. In addition, we are starting to get some evidence that charters, which have redundant systems of school administration and often can't achieve economies of scale, are putting fiscal pressure on their hosting public district schools.

While these points are sometimes mentioned in the academic, peer-reviewed papers based on these studies, I rarely see them acknowledged when economists discuss these studies in the popular press. Nor do I see any discussion of the fact that...

- Studies of education "reforms" often have very fuzzy definitions of the treatment. The treatment is, broadly, the policy intervention we care about. For charter schools, the treatment is taking a school out of public district control and putting it under the administration of a private, non-state actor entity. The problem is that there are often differences between charters and public district schools that are not what we care to study, and these differences often get in the way of the things we do care about.

Here's a completely hypothetical pie chart from an earlier post. Let's imagine all the differences we might see between a charter school and a counterfactual public district school.

We know charter school teachers generally have a lot less experience than public district school staff, which makes staffing costs cheaper. There is almost certainly a free-rider problem with this, leading to a fiscal disadvantage for public district schools. But that's good for the charters: they can extend their school days and still keep their per pupil costs lower than the public schools.

But is this a treatment we really want to study? Shouldn't we, in fact, control for this difference if what we want to measure is the effect of moving students to schools under private control? Shouldn't we control for peer effects and attrition and resource differences if what we really care about is "charteriness"?

The economists who conduct this research often refer to their treatment as "No Excuses." What they don't do, so far as I've ever seen, is document the contrast in the implementation of their treatment between charter schools and counterfactual public district schools. In other words: do we really know that "No Excuses" varies significantly between charters and public schools?

A lot of the research into charter school characteristics is, frankly, cursory. Self-reported survey answers, plus a few videos of a small number of charters in one city, are not really enough qualitative research to give us a working definition of "No Excuses" -- especially when there's no data on the contrasting schools that supposedly don't adhere to the same practices.

So, no, we can't attribute the "success" of certain charter schools to their practices or organization -- at least, not based on these econometric studies. And we really need to step back and think about what we're using to define "success"...

- Test scores have inherent problems that limit their usefulness in econometric research. I keep a copy of Standards for Educational and Psychological Testing within arm's length when I review education research that involves testing outcomes. And I have Standard 13.4 (p. 210) highlighted:

Evidence of validity, reliability, and fairness for each purpose for which a test is used in a program evaluation, policy study, or accountability system should be collected and made available.

This is a process that has been largely ignored in much of the econometric research presented as evidence for all sorts of policies. The truth is that standardized tests are, at best, noisy, biased measures of student learning. As Daniel Koretz points out in his excellent book, The Testing Charade, it is quite easy to improve test scores by giving students strategies that have little to do with meaningful mastery of a domain of learning.

Koretz also notes that multiple charter school leaders have explicitly said that improving test scores is the primary focus of their schools' instruction. As Bruce Baker and I note, there is at least some evidence that these improvements came at the expense of instruction in non-tested domains. That lines up with a body of evidence that suggests increased accountability, tied to test scores, has narrowed the curriculum in our schools.

I'm the last person to say we shouldn't use test scores to conduct research. But when the test score gains that econometric research shows are marginal, we should all stop and consider for a bit whether we're seeing gains that represent real educational progress. And many of these studies show gains that are quite marginal...

- Compared to the effects of student background characteristics -- especially socio-economic status -- the effect sizes of education "reforms" are almost always small. Student background characteristics are by far the best predictors of a test score. We know for a fact that poverty greatly affects a child's ability to learn.

The claim of reformers, however, is often that education can be a great equalizer, leading to more equitable outcomes in social mobility. Over and over, the charter sector has claimed they are "closing the achievement gap," implying the education they offer is equivalent to the leafy 'burbs and that their students, therefore, are overcoming the massive inequities built into our society.

I made this chart a while ago, which compares the effects of charter schools, as measured by the vaunted CREDO studies, to the 90/10 income achievement gap:

The income achievement gap has actually been growing over the years; it now roughly stands at 1.25 standard deviations.

No educational intervention I have seen studied using econometric methods comes close to equaling this gap. As Stanley Pogrow notes, economists seem to be all too happy to have the effect sizes they find declared practically meaningful, when often there is little to no evidence to support that conclusion.

One of the arguments made by some researchers is that these effects are cumulative: the intervention keeps adding more and more value to a student's test score growth, so that, eventually, Harlem and Scarsdale meet up. Except, as Matt DiCarlo points out in this post, you really shouldn't do that -- at least, you should only do so after pointing out that you're only making an extrapolation.
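A small sketch makes the hidden assumption in the "cumulative" argument explicit. It takes the 1.25 SD income achievement gap cited above and asks how many years a constant annual gain would need to stack up, with no fade-out, to close it. The annual effect sizes in the loop are hypothetical round numbers chosen for illustration, not results from any study, and the function name is invented here.

```python
def years_to_close_gap(gap_sd: float, annual_effect_sd: float) -> float:
    """Years needed if the same gain simply added up every year, with no fade-out.

    This is exactly the linear extrapolation the text warns about; the function
    only makes that assumption visible.
    """
    if annual_effect_sd <= 0:
        raise ValueError("annual effect must be positive")
    return gap_sd / annual_effect_sd


INCOME_GAP_SD = 1.25  # 90/10 income achievement gap cited in the text

if __name__ == "__main__":
    for annual_effect in (0.05, 0.10, 0.20):  # hypothetical effect sizes
        years = years_to_close_gap(INCOME_GAP_SD, annual_effect)
        print(f"{annual_effect:.2f} SD per year -> about {years:.1f} years")
```

Even on the generous assumption that gains never fade and never shrink, small annual effects imply more years than a student spends in school, which is why the extrapolation needs to be labeled as one.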

This is often where economists get into trouble: trying to translate their effects into more understandable terms...

- The interpretation of effect sizes into other measures, such as "days of learning," is often highly questionable. The CREDO studies have led the way in translating effect sizes into layman's terms -- with indefensible results. As I've pointed out previously, the use of "days of learning" in this case is wholly invalidated, if only because there is no evidence the tests used in the research have the properties necessary for conversion into a time scale. And the documentation of the validation of this method is slipshod -- it's really just a bunch of links to studies which in no way validate the conversion.

Recently, a study came out about interventions in Newark's schools and their effects on test scores. As I note in the review I did with Bruce Baker (p. 27), the effect size found of 0.07 SD was compared to "the impact of being assigned to an experienced versus novice teacher." But that comparison was based on a single study, by one of the authors, which compared teachers in Los Angeles who had no experience to those who had two years. This is hardly enough evidence to make such a sweeping statement.

In another interpretation, 0.07 SD moves test scores for a treatment group from the 50th to the 53rd percentile. Small moves like these are very common in education research...
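The percentile conversion is easy to check. The sketch below assumes test scores are normally distributed (an assumption, and only an approximation for real tests) and uses Python's standard-library `statistics.NormalDist`; the helper name `percentile_after_shift` is made up for this example.

```python
from statistics import NormalDist


def percentile_after_shift(effect_size_sd: float, start_percentile: float = 50.0) -> float:
    """Percentile reached after gaining `effect_size_sd`, assuming normal scores."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    start_z = std_normal.inv_cdf(start_percentile / 100.0)
    return 100.0 * std_normal.cdf(start_z + effect_size_sd)


if __name__ == "__main__":
    # The 0.07 SD effect discussed above, starting from the median.
    print(round(percentile_after_shift(0.07), 1))  # 52.8
    # For scale: a 1.25 SD shift, the size of the income achievement gap.
    print(round(percentile_after_shift(1.25), 1))  # 89.4
```

Under that normality assumption, 0.07 SD carries a median student to roughly the 53rd percentile, while a shift the size of the income achievement gap would carry the same student to about the 89th.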

- The influence of teachers on measurable student outcomes is practically small. I am a teacher, and I think what I do matters. I think I make a difference in the lives of my students in many ways, most of which can't be quantified.

But in the aggregate, there is little evidence teachers have anywhere near as much effect on student outcomes as out-of-school factors. As the American Statistical Association notes, teachers account for somewhere between 1 and 14 percent of the variation in test scores -- and we're not even sure how much of that is really attributable to the teacher.

One study that is cited again and again to show how much teachers matter is Chetty, Friedman, Rockoff. It's a very clever piece of econometric work, but in no way does it show that having a "great" teacher will change your life. Its effects have been run through the Mountain-Out-Of-A-Molehill-Inator to make it appear that teacher quality can have a profound influence on students' income later in life. But what it really says is that you'll earn $5 a week more in the NYC labor market when you're 28 if you have a "great" teacher (the effect if you were 30 is not statistically significant).

Am I the only one who is underwhelmed by this finding?

* * *

Again: I have no objection to using test scores as variables in quantitative research designs. I will be the first to say there is evidence that policy interventions like charter schools in Boston or teacher evaluation in Washington D.C.* show some modest gains in student outcomes. It's valuable to study this stuff and use it to inform policymaking -- in context.

But simply showing a statistically significant effect size for a certain policy is not enough to justify implementing it. Some economists, like Doug Harris in this interview, make a point of stating this clearly. In my opinion, however, what Harris did doesn't happen nearly enough -- which leads to pieces like Chait's, where he clearly has no idea about the many limitations of the work he cites.

The question is: Whose fault is that?

Have the researchers who inform our punditocracy's view of education policy done enough to explain how those pundits should be interpreting their findings?

Chait and others like him have the final responsibility to get this stuff right. But economists also have a responsibility to make sure their work is being interpreted in valid ways. I respectfully suggest that it's time for them to start taking some ownership of the consequences of their research. Explaining its limits and cautioning against overly broad interpretations would go a long way toward having better conversations about education policy.

* What they don't show is that student learning improved after a new teacher evaluation system was put in place. More on this later...